
Research

Motivation

How do intentional states come about in our minds? How do they translate into sensorimotor programs to reach our intended goals? How do our brains represent the knowledge that both dogs and birds are animals, or that driving a car is a means to get from point A to point B? More generally speaking, how do entities and actions come to have meaning? How do we reduce the computational effort required to turn an abstract intention into a concrete, high-dimensional motor control program for our overarticulated bodies? Conversely, how do we infer a probable intention from the high-dimensional motor output of our fellow human beings?

If we understood how the brain represents such relational information on different levels of the cortical hierarchy, we would be able to bridge the gap between mostly sensory-driven, bottom-up approaches in Computer Vision and Machine Learning on the one hand, and semantic-level, logical AI approaches (such as Markov logic or Bayesian logic programs) on the other. A similar gap exists in computational motor control between movement primitives and optimal control approaches on the one hand, and grammar-based action representation on the other.

Answering these fundamental cognitive questions would have several important applications. Besides behavior analysis and prediction in technical systems (e.g. driver assistance, recommender systems), it might enable us to design assistive technology for patients with certain degenerative diseases, e.g. semantic dementia (visual associative agnosia, Alzheimer’s, apraxia). Furthermore, applications in a movement science context (personalised training, rehabilitation) are conceivable.

Previous work

I postulate, with Hommel et al. (2001), that there is a common code which governs both movement production and perception on different levels of the mind’s sensorimotor hierarchy. One of the advantages of such a common code is the ability to understand actions by predicting their (perceptual) effects; evidence for the existence of a neural code with this property has been described by Press et al. (2011). To keep the computational effort in applications of this idea manageable, we developed fast Bayesian machine learning approaches to compare and validate different movement representations (Velychko et al., 2018; Chiovetto et al., 2018; Endres et al., 2013).

These representations are based on primitives, i.e. short, modular and recombinable movement programs. Our data indicate that human movement perception is nearly Bayes-optimal. We were also able to show that these representations are not only perceptually predictive, but also suitable for producing a dynamically stable gait in a humanoid robot (Clever et al., 2016, 2017). For the study of object semantics, I used Formal Concept Analysis (FCA), a mathematical framework from the theory of ordered sets, for semantic neural decoding: instead of just trying to figure out which stimulus was presented (‘classical’ neural decoding), I demonstrated how to explore the semantic relationships (e.g. hierarchies, product-of-experts-like coding) in the neural representation of large sets of stimuli, both in neurophysiological data (Endres et al., 2010; Endres and Földiák, 2009) and in human fMRI recordings (Endres et al., 2012) (see fig. 1). Both of these projects required me to develop novel machine learning approaches for discovering latent symbols in neural representations.
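To make the FCA idea concrete, here is a minimal Python sketch of how formal concepts can be enumerated from a small binary object-attribute table. It is not the decoding method from the cited papers: the toy context (`dog`, `bird`, `car` and their attributes) and all function names are hypothetical and chosen purely for illustration, and the brute-force enumeration over object subsets is only practical for very small contexts.

```python
from itertools import combinations

# Hypothetical toy context: each object (stimulus) maps to the attributes it has.
CONTEXT = {
    "dog": {"animal", "four_legs"},
    "bird": {"animal", "flies"},
    "car": {"vehicle"},
}


def common_attributes(objects, context):
    """Attributes shared by all given objects (every attribute if objects is empty)."""
    shared = set().union(*context.values())
    for obj in objects:
        shared &= context[obj]
    return shared


def objects_having(attributes, context):
    """Objects that possess every attribute in the given set."""
    return {obj for obj, attrs in context.items() if attributes <= attrs}


def formal_concepts(context):
    """Enumerate all formal concepts as (extent, intent) pairs by brute force.

    A formal concept is a pair (extent, intent) such that the intent is exactly
    the set of attributes shared by the extent, and the extent is exactly the
    set of objects having all attributes in the intent.
    """
    concepts = set()
    names = sorted(context)
    for size in range(len(names) + 1):
        for subset in combinations(names, size):
            intent = common_attributes(subset, context)
            extent = objects_having(intent, context)
            concepts.add((frozenset(extent), frozenset(intent)))
    return concepts


if __name__ == "__main__":
    # Print concepts from the most general (largest extent) to the most specific.
    for extent, intent in sorted(formal_concepts(CONTEXT), key=lambda c: -len(c[0])):
        print(sorted(extent), sorted(intent))
```

In this toy context, the concept with extent {dog, bird} and intent {animal} captures the statement that both dogs and birds are animals. Ordering concepts by inclusion of their extents yields the concept lattice, which is the kind of hierarchy that semantic decoding sets out to explore.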

Current research

First research direction

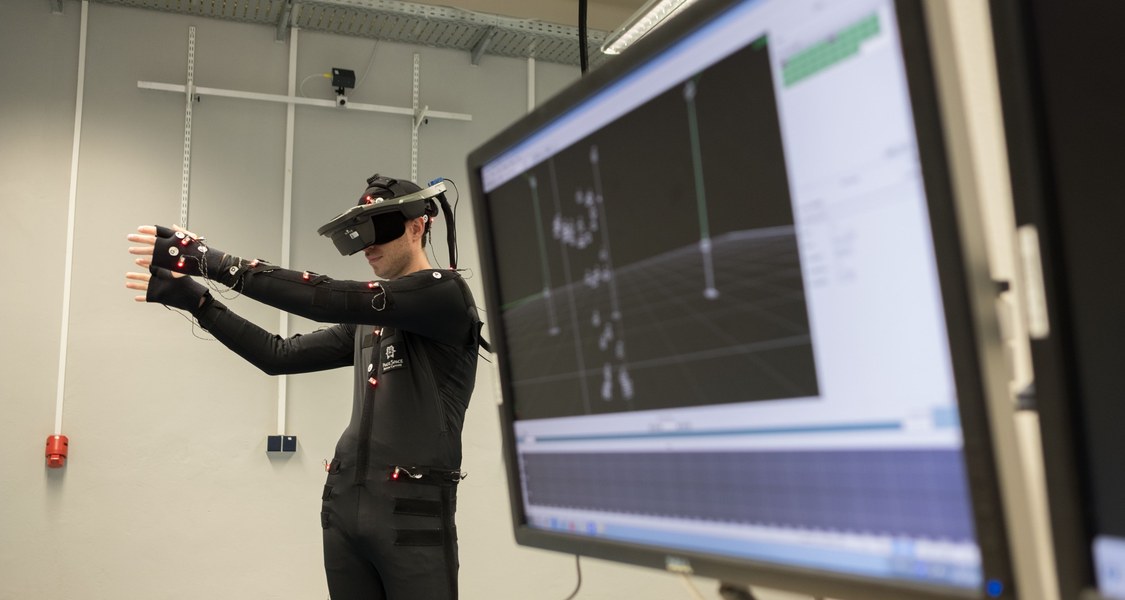

Sensory-motor primitives and hierarchies. I will extend my Bayesian movement primitive comparison framework (Endres et al., 2013) to support sensorimotor primitives, in order to investigate the postulated direct coupling between perception and movement. I will ensure high external validity by recording large databases of human activities in natural and naturalistic (VR) environments. The data will comprise movements (body and eyes) as well as synchronously recorded sensory signals (visual, haptic). I expect representations to emerge whose compactness and sparseness are similar to those of our previous movement primitives. Subsequently, I plan to model the more abstract levels of the sensorimotor hierarchy, up to the level at which intentions are represented.

Second research direction

Relational coding in perception and machine learning. I intend to continue developing my models of relational representation in the mind/brain. These models will be tested through a combination of behavioral and possibly fMRI experiments (with interested collaborators). Furthermore, I will investigate how such relationships are learned from data, using natural and synthetic stimuli with controllable structure generated by computer graphics methods (Velychko et al., 2018; Knopp et al., 2019). For validation of the results and technical applicability, I will develop a unified machine learning framework for conceptual learning by fusing probability theory with FCA, in the context of stochastic process learning. This will allow me to connect conceptual, high-level relational structures to low-level representations, e.g. sensory-motor or purely visual feature hierarchies, thereby closing the neuro-symbolic gap. My work contributes to the efforts on deep model structure learning, which are currently of great interest to the Machine Learning community.