
Visual information sampling and attentional control

Humans are usually quite efficient in attentional selection: they manage to focus their processing capacity on relevant information and to ignore a vast amount of irrelevant information. However, most of what we know about attentional selection stems from experiments with simplified stimuli and predefined targets and distractors. Are similar mechanisms at work when humans sample visual information in natural, unstructured environments? In real-world scenarios, humans often perform several tasks simultaneously and have to decide which signals are relevant or might become relevant in the near future. How do humans decide where to attend, and how do they interpret their visual environment to optimize their behavior?

We investigate how humans adapt their attentional control settings to optimize information sampling under dynamically changing conditions. We want to learn more about the (strategic) factors that influence this adaptation.

In particular, our projects focus on

- Visual foraging as a tool for understanding attentional strategies

- Interaction of Task Demands and Object Properties in Naturalistic Environments

- Target choice in dynamically changing environments

- Context learning and visual attention

Visual foraging as a tool for understanding attentional strategies

Principal investigators: Jan Tünnermann, Kevin Hartung, Anna Schubö

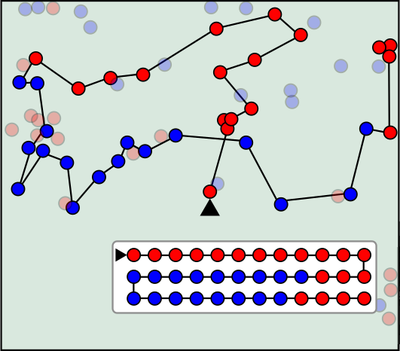

For humans and other organisms, visual foraging is an important behavior for acquiring resources (e.g., food). Active vision and selective visual attention facilitate the selection of target objects, especially in crowded scenes. Computer-based virtual foraging tasks therefore provide a valuable tool for assessing attentional control: the order in which participants select items in multi-item displays (their selection history) reveals how they control their attention to trade off various strategic factors.

For instance, switching between search templates appears to require some effort: experiments conducted in the 1970s showed that chickens tend to pick grains of the same color over extended periods, even when differently colored grains are equally tasty. We conduct computer-based experiments to investigate visual foraging and attentional strategies in humans. In these experiments, participants typically have to select target items and avoid distractor items under continuously changing conditions (e.g., gradual changes in target–distractor similarity or frequency). Participants forage using touch-screen responses, a virtual stylus, or eye movements.
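One simple way to quantify template switching from a selection history is to count switches (changes of target type between consecutive selections) and runs (uninterrupted stretches of the same type), and to compare the observed switches with a chance baseline. The Python sketch below assumes that a trial's selection history is stored as a list of target-type labels; the function names, the data format, and the shuffle-based baseline are illustrative assumptions, not the analysis used in the studies cited here.

```python
import random
from typing import Sequence


def count_runs_and_switches(selections: Sequence[str]) -> tuple[int, int]:
    """Return (runs, switches) for one trial's selection history.

    A switch is a change of target type between two consecutive selections;
    a run is a maximal stretch of same-type selections, so a non-empty
    history always has runs == switches + 1.
    """
    if not selections:
        return 0, 0
    switches = sum(1 for prev, curr in zip(selections, selections[1:]) if prev != curr)
    return switches + 1, switches


def chance_switches(selections: Sequence[str], n_shuffles: int = 10_000, seed: int = 0) -> float:
    """Mean number of switches if the same items were collected in random order.

    Simplifying assumption: every order of the collected items is equally
    likely, which ignores how item availability changes during a real trial.
    """
    rng = random.Random(seed)
    items = list(selections)  # copy so the caller's data are not reordered
    total = 0
    for _ in range(n_shuffles):
        rng.shuffle(items)
        total += count_runs_and_switches(items)[1]
    return total / n_shuffles


# Hypothetical trial in which a participant collects red and green targets.
history = ["red", "red", "red", "green", "green", "red", "green", "green"]
print(count_runs_and_switches(history))  # (4, 3)
print(round(chance_switches(history), 1))  # close to 4.0 (= 2 * 4 * 4 / 8)
```

Under this hypothetical baseline, observing fewer switches than expected by chance would be consistent with participants avoiding costly template switches, much like the chickens that kept picking grains of one color.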

Related literature:

Tünnermann, J., Chelazzi, L., & Schubö, A. (2021). How feature context alters attentional template switching. Journal of Experimental Psychology: Human Perception and Performance, 47(11), 1431–1444.

Grössle, I. M., Schubö, A., & Tünnermann, J. (2023). Testing a relational account of search templates in visual foraging. Scientific Reports, 13(1), 12541.

Bergmann, N., Tünnermann, J., & Schubö, A. (2019). Which search are you on? Adapting to color while searching for shape. Attention, Perception, & Psychophysics, 16(3).

Interaction of Task Demands and Object Properties in Naturalistic Environments

Principal investigators: Parishad Bromandnia, Jan Tünnermann, Anna Schubö

Foraging has often been studied in controlled experimental settings using highly simplified stimuli, such as colored shapes. However, recent research has begun to explore visual attention in more naturalistic environments, using real-world stimuli such as LEGO® bricks (Sauter et al., 2020). In this project, we investigate attentional mechanisms under simulated real-world conditions as a function of object features and task complexity. This approach aims to improve our understanding of how people interact with complex objects in everyday scenarios (Tünnermann et al., 2022).

Related literature:

Sauter, M., Stefani, M., & Mack, W. (2020). Towards interactive search: Investigating visual search in a novel real-world paradigm. Brain Sciences, 10(12). https://doi.org/10.3390/brainsci10120927

Tünnermann, J., Kristjánsson, Á., Petersen, A., Schubö, A., & Scharlau, I. (2022). Advances in the application of a computational Theory of Visual Attention (TVA): Moving towards more naturalistic stimuli and game-like tasks. Open Psychology, 4(1). https://doi.org/10.1515/psych-2022-0002

Target choice in dynamically changing environments

Method: Behavioral & Eye-Tracking

Contact: Yunyun Mu, Jan Tünnermann, Anna Schubö

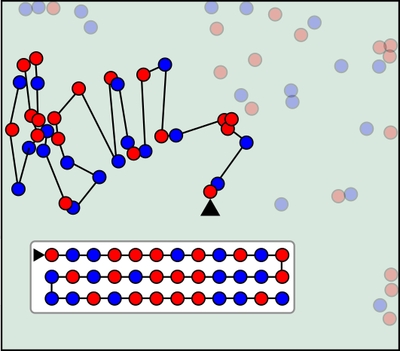

Abstract: Intentions and external stimuli in the environment guide human visual attention. When the visual environment changes gradually, observers can potentially adjust to regularities in these changes and make strategic use of them. For instance, if observers can choose between different targets (e.g., a red one or a blue one), they might prefer targets that are dissimilar to distracting elements. If these distracting elements change predictably over time (e.g., the number of bluish distractors decreases while that of reddish ones increases), observers might adjust their target preference accordingly, switching from one target color to the other as the latter becomes “more unique”.
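The strategic adjustment described above can be illustrated with a toy observer whose preference for the red target grows with the proportion of bluish distractors. The logistic choice rule, the `sensitivity` parameter, and the distractor counts in this Python sketch are hypothetical assumptions chosen for illustration; they are not the model or design of the project.

```python
import math


def p_choose_red(n_bluish: int, n_reddish: int, sensitivity: float = 5.0) -> float:
    """Probability that a hypothetical observer picks the red target.

    The red target is 'more unique' when bluish distractors outnumber
    reddish ones; the logistic rule turns this advantage into a graded
    preference, with `sensitivity` controlling how sharp the switch is.
    """
    advantage = (n_bluish - n_reddish) / (n_bluish + n_reddish)
    return 1.0 / (1.0 + math.exp(-sensitivity * advantage))


# Gradual change over 9 steps: bluish distractors decrease, reddish ones increase.
N_DISTRACTORS = 20
for step in range(9):
    n_bluish = 18 - 2 * step
    n_reddish = N_DISTRACTORS - n_bluish
    p_red = p_choose_red(n_bluish, n_reddish)
    preferred = "red" if p_red > 0.5 else "blue" if p_red < 0.5 else "either"
    print(f"step {step}: {n_bluish:2d} bluish, {n_reddish:2d} reddish -> P(red) = {p_red:.2f} ({preferred})")
```

In this toy model the preference flips at step 4, where both distractor colors are equally frequent; an observer with a switch cost (as in the foraging work) might instead be expected to switch later than this point.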

Related literature:

Mu, Y., Schubö, A., & Tünnermann, J. (2024). Adapting attentional control settings in a shape-changing environment. Attention, Perception, & Psychophysics, 86(2), 404–421.

Bergmann, N., Tünnermann, J., & Schubö, A. (2019). Which search are you on? Adapting to color while searching for shape. Attention, Perception, & Psychophysics, 16(3).

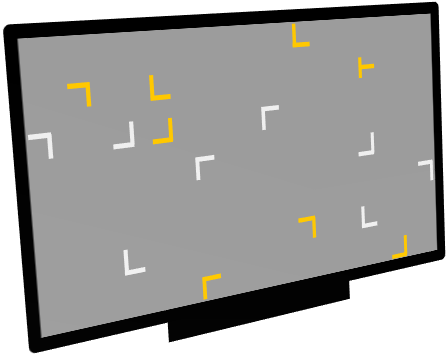

Context learning and visual attention

Principal investigators: Danilo Kuhn, Aylin Hanne, Anna Schubö

In this project, we examine the impact of context information on attention. Learning context regularities improves the efficiency of localizing a target (Chun & Jiang, 1998) and a distractor (Wang & Theeuwes, 2018; Hanne, Tünnermann, & Schubö, 2023). We use additional-singleton search and contextual cueing tasks to investigate spontaneous learning of spatial context regularities and its impact on attentional guidance. Reward, for instance, sensitizes participants to repeated context information and helps them guide attention more efficiently to the target.
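In contextual cueing tasks, the benefit of learned context is commonly expressed as the response-time difference between novel and repeated search displays, tracked across blocks or epochs. The Python sketch below computes this difference per epoch; the function name, the tuple layout of `trials`, and the response times are made up for illustration and do not come from the project's data.

```python
from collections import defaultdict
from statistics import fmean


def cueing_effect_by_epoch(trials):
    """Contextual cueing effect per epoch: mean RT(novel) - mean RT(repeated).

    `trials` is an iterable of (epoch, condition, rt_ms) tuples, where
    condition is "repeated" (a context seen before) or "novel".
    Positive values mean faster search in repeated contexts.
    """
    rts = defaultdict(lambda: {"repeated": [], "novel": []})
    for epoch, condition, rt_ms in trials:
        rts[epoch][condition].append(rt_ms)
    return {
        epoch: fmean(conds["novel"]) - fmean(conds["repeated"])
        for epoch, conds in sorted(rts.items())
    }


# Made-up response times (ms) for two epochs, for illustration only.
trials = [
    (1, "repeated", 820), (1, "repeated", 800), (1, "novel", 810), (1, "novel", 830),
    (2, "repeated", 700), (2, "repeated", 720), (2, "novel", 780), (2, "novel", 760),
]
print(cueing_effect_by_epoch(trials))  # {1: 10.0, 2: 60.0}
```

A cueing effect that grows over epochs, as in these invented numbers, is the typical signature of context regularities being learned over time.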

Related literature:

Bergmann, N., Tünnermann, J., & Schubö, A. (2020). Reward-predicting distractor orientations support contextual cueing: Persistent effects in homogeneous distractor contexts. Vision Research, 171, 53–63.

Bergmann, N., & Schubö, A. (2021). Local and global context repetitions in contextual cueing. Journal of Vision, 21(10):9, 1–17.

Feldmann-Wüstefeld, T., & Schubö, A. (2014). Stimulus homogeneity enhances implicit learning: evidence from contextual cueing. Vision Research, 97, 108–116.

Hanne, A. A., Tünnermann, J., & Schubö, A. (2023). Target templates and the time course of distractor location learning. Scientific Reports, 13, 1672. https://doi.org/10.1038/s41598-022-25816-9

Schankin, A., & Schubö, A. (2010). Contextual cueing effects despite spatially cued target locations. Psychophysiology, 47, 717–727.